
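1. Introduction

Elasticsearch is a popular search engine in the Java ecosystem. Traditional database search relies on LIKE '%keyword%', which slows down sharply as the amount of text grows, because a pattern with a leading wildcard cannot use an ordinary index. Elasticsearch was built to solve this kind of problem.

The following records how to install Elasticsearch on Linux, as the basis for full-text search that combines a large language model with Elasticsearch.

The examples below use Ubuntu 24.04.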

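2. Installing Elasticsearch

2.1 Installing Java on Ubuntu

Elasticsearch 9.x ships with its own bundled JDK, so this step is optional. Install a system JDK only if other software on the server needs one.

# Update package index

shell>>>sudo apt update

shell>>>sudo apt install openjdk-11-jdk

shell>>>java --version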

openjdk 11.0.28 2025-07-15

OpenJDK Runtime Environment (build 11.0.28+6-post-Ubuntu-1ubuntu124.04.1)

OpenJDK 64-Bit Server VM (build 11.0.28+6-post-Ubuntu-1ubuntu124.04.1, mixed mode, sharing)

2.2 Installing Elasticsearch on Linux

2.2.1 Downloading and extracting the package

Official download page: https://www.elastic.co/cn/downloads/elasticsearch

### Or download it directly from the Linux command line

shell>>>wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-9.2.0-linux-x86_64.tar.gz

Extract the archive:

shell>>>sudo tar -zxvf elasticsearch-9.2.0-linux-x86_64.tar.gz

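shell>>>sudo mv elasticsearch-9.2.0 /usr/local/elasticsearch

Fixing the JDK problem (skip this if no error is reported)

Note: if no JDK is configured on the Linux server, ES simply uses the JDK bundled in its own directory, and this error does not occur at all.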

warning: usage of JAVA_HOME is deprecated, use ES_JAVA_HOME

Future versions of Elasticsearch will require Java 11; your Java version from [/usr/local/jdk1.8.0_291/jre] does not meet this requirement. Consider switching to a distribution of Elasticsearch with a bundled JDK. If you are already using a distribution with a bundled JDK, ensure the JAVA_HOME environment variable is not set.
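To see which java binary an environment would lead ES to use, a small diagnostic helps. The following is a minimal sketch under an assumed lookup order: ES_JAVA_HOME first, then the bundled jdk/ directory, then java on PATH (the last step mirrors the fallback in the fix shown below). es_java_path is a hypothetical helper name, not part of Elasticsearch.

```shell
#!/usr/bin/env bash
# Hypothetical diagnostic helper: print the java binary an ES install would
# most likely use. Assumed order: ES_JAVA_HOME, then the bundled jdk/
# directory, then (like the fallback in the fix below) java on PATH.
es_java_path() {
  local es_home="$1"
  if [ -n "${ES_JAVA_HOME:-}" ] && [ -x "$ES_JAVA_HOME/bin/java" ]; then
    echo "$ES_JAVA_HOME/bin/java"
  elif [ -x "$es_home/jdk/bin/java" ]; then
    echo "$es_home/jdk/bin/java"
  else
    command -v java || return 1   # last resort: whatever java is on PATH
  fi
}
```

For example, es_java_path /usr/local/elasticsearch. In ES 9.2.0 itself, JAVA_HOME is ignored in favor of the bundled JDK, as the elasticsearch-plugin list output in section 3.2 shows ("ignoring JAVA_HOME ...; using bundled JDK"), so this warning mainly concerns older releases.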

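Solution: the warning itself recommends ES_JAVA_HOME instead of JAVA_HOME, and leaving JAVA_HOME unset for the user that runs ES also avoids the problem. The approach taken here is to point the startup script in bin/ at the JDK bundled with ES:

shell>>>cd /usr/local/elasticsearch/bin

# Point JAVA_HOME at the JDK bundled with ES

export JAVA_HOME=/usr/local/elasticsearch/jdk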

export PATH=$JAVA_HOME/bin:$PATH

if [ -x "$JAVA_HOME/bin/java" ]; then

JAVA="/usr/local/elasticsearch/jdk/bin/java"

else

JAVA=`which java`

fi

Fixing insufficient memory (skip this if no error is reported)

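In this setup Elasticsearch is given a 2 GB JVM heap by default. On a server with little memory, startup fails as shown below, so reduce the heap. If the server has plenty of memory, no change is needed.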

error:

OpenJDK 64-Bit Server VM warning: INFO: os::commit_memory(0x00000000c6a00000, 962592768, 0) failed; error='Not enough space' (errno=12)

at org.elasticsearch.tools.launchers.JvmOption.flagsFinal(JvmOption.java:119)

at org.elasticsearch.tools.launchers.JvmOption.findFinalOptions(JvmOption.java:81)

at org.elasticsearch.tools.launchers.JvmErgonomics.choose(JvmErgonomics.java:38)

at org.elasticsearch.tools.launchers.JvmOptionsParser.jvmOptions(JvmOptionsParser.java:13

Go to the config directory and edit jvm.options:

shell>>>cd /usr/local/elasticsearch/config

shell>>>vim jvm.options

The default settings are:

-Xms2g

-Xmx2g

That uses too much memory for a small server, so reduce it:

-Xms256m

-Xmx256m
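Recent Elasticsearch versions size the heap automatically, and Elastic's documentation recommends putting heap overrides in a file under config/jvm.options.d/ (the file name must end in .options) rather than editing jvm.options itself. The following is a minimal sketch of that approach, assuming the install path used in this guide. write_heap_options is a hypothetical helper. It sets -Xms and -Xmx to the same value so the heap is not resized at runtime.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a heap override into <config>/jvm.options.d/
# instead of editing jvm.options. Xms and Xmx get the same value.
write_heap_options() {
  local config_dir="$1" size="$2"
  case "$size" in
    *[0-9][mMgG]) ;;   # require a unit suffix such as 256m or 2g
    *) echo "size must look like 256m or 2g" >&2; return 1 ;;
  esac
  mkdir -p "$config_dir/jvm.options.d"
  printf '%s\n' "-Xms$size" "-Xmx$size" > "$config_dir/jvm.options.d/heap.options"
}
```

Run it as user-es, for example write_heap_options /usr/local/elasticsearch/config 256m, and then restart ES.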

2.2.2 Creating a dedicated user to run ES

Elasticsearch cannot be started as root, so create a dedicated user to run it. If you start it as root, it fails with:

java.lang.RuntimeException: can not run elasticsearch as root

at org.elasticsearch.bootstrap.Bootstrap.initializeNatives(Bootstrap.java:101)

at org.elasticsearch.bootstrap.Bootstrap.setup(Bootstrap.java:168)

at org.elasticsearch.bootstrap.Bootstrap.init(Bootstrap.java:397)

at org.elasticsearch.bootstrap.Elasticsearch.init(Elasticsearch.java:159)

at org.elasticsearch.bootstrap.Elasticsearch.execute(Elasticsearch.java:150)

at org.elasticsearch.cli.EnvironmentAwareCommand.execute(EnvironmentAwareCommand.java:75)

at org.elasticsearch.cli.Command.mainWithoutErrorHandling(Command.java:116)

at org.elasticsearch.cli.Command.main(Command.java:79)

at org.elasticsearch.bootstrap.Elasticsearch.main(Elasticsearch.java:115)

at org.elasticsearch.bootstrap.Elasticsearch.main(Elasticsearch.java:81)

Create the user:

shell>>>sudo useradd user-es

Give the user ownership of the ES installation, and create data and log directories that the user owns:

shell>>>sudo chown user-es:user-es -R /usr/local/elasticsearch

shell>>>sudo mkdir -p /home/user-es/elasticsearch/logs

shell>>>sudo mkdir -p /home/user-es/elasticsearch/data

shell>>>sudo chown user-es:user-es -R /home/user-es/elasticsearch/logs

shell>>>sudo chown user-es:user-es -R /home/user-es/elasticsearch/data

Set the user's password:

shell>>>sudo passwd user-es

Switch to the user-es user:

shell>>>sudo -u user-es bash

Go to the bin directory:

shell>>>cd /usr/local/elasticsearch/bin

Editing the core ES configuration

Edit elasticsearch.yml:

shell>>>vim /usr/local/elasticsearch/config/elasticsearch.yml

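Data and log directories

This is optional. If you leave these settings unchanged, both default to directories under the Elasticsearch root.

# Data directory

path.data: /home/user-es/elasticsearch/data

# Log directory

path.logs: /home/user-es/elasticsearch/logs

Bind address (allow remote access)

Binding to a non-loopback address also puts ES into production mode. In this mode ES enforces its bootstrap checks, which is why the vm.max_map_count and file-descriptor errors below can appear.

# By default only the local machine can connect; binding to 0.0.0.0 allows remote access

# Bind to 0.0.0.0 so that any IP can connect

network.host: 0.0.0.0

## Cluster and node names, and the initial master node

cluster.name: elasticsearch

node.name: es-node0

cluster.initial_master_nodes: ["es-node0"]

Port (optional)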

http.port: 9200
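If you script the installation instead of editing elasticsearch.yml in vim, a small helper can set each key in a way that is safe to repeat. The following is a minimal sketch, assuming only flat top-level keys written as "key: value" on one line. set_yml_key is a hypothetical name, and commented-out lines (starting with #) are left untouched.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set "key: value" in a flat YAML file such as
# elasticsearch.yml. An uncommented line starting with "key:" is replaced;
# if none exists, the setting is appended. Nested YAML is not handled.
set_yml_key() {
  local file="$1" key="$2" value="$3" tmp
  tmp=$(mktemp) || return 1
  awk -v k="$key" -v v="$value" '
    index($0, k ":") == 1 { print k ": " v; found = 1; next }
    { print }
    END { if (!found) print k ": " v }
  ' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
  # Copy back with cat so the original file keeps its owner and mode
  cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

For example, set_yml_key /usr/local/elasticsearch/config/elasticsearch.yml network.host 0.0.0.0, run once per setting shown above.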

------

The "vm.max_map_count [65530] is too low" error

ERROR: [1] bootstrap checks failed. You must address the points described in the following [1] lines before starting Elasticsearch.

bootstrap check failure [1] of [1]: max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]

The kernel limit on the number of memory-mapped areas a process may use is too low. Elasticsearch needs at least 262144. To fix it:

Appending the following line to the end of /etc/sysctl.conf makes the change permanent.

Switch to the root user and run:

su root

vim /etc/sysctl.conf

Add:

vm.max_map_count=262144

Save and exit, then reload the configuration:

shell>>>sysctl -p
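To confirm the value before and after the change, the following minimal sketch compares a value with the 262144 minimum taken from the bootstrap-check message above. max_map_count_ok is a hypothetical helper. When called without an argument, it reads the live kernel setting from /proc.

```shell
#!/usr/bin/env bash
# Minimum required by the ES bootstrap check (from the error message above)
ES_MIN_MAP_COUNT=262144

# Hypothetical helper: succeed if the given value (default: the live kernel
# setting read from /proc) meets the ES minimum.
max_map_count_ok() {
  local current="${1:-$(cat /proc/sys/vm/max_map_count)}"
  [ "$current" -ge "$ES_MIN_MAP_COUNT" ]
}
```

For example, max_map_count_ok && echo ok. The command sysctl -w vm.max_map_count=262144 (run as root) changes the running kernel immediately, and the line in /etc/sysctl.conf keeps the setting across reboots.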

Switch back to user-es and continue starting ES:

shell>>>sudo -u user-es bash

2.2.3 Starting the ES service

shell>>>/usr/local/elasticsearch/bin/elasticsearch

✅ Elasticsearch security features have been automatically configured!

✅ Authentication is enabled and cluster connections are encrypted.

ℹ️ Password for the elastic user (reset with `bin/elasticsearch-reset-password -u elastic`):

*EsWbPQwj7BmAONEtyjn

ℹ️ HTTP CA certificate SHA-256 fingerprint:

3b3d6de2dea7fabb238d08a1a0a24c58d2f4cf7086d5cfdc6355d86e8f0ffda3

ℹ️ Configure Kibana to use this cluster:

• Run Kibana and click the configuration link in the terminal when Kibana starts.

• Copy the following enrollment token and paste it into Kibana in your browser (valid for the next 30 minutes):

eyJ2ZXIiOiI4LjE0LjAiLCJhZHIiOlsiMTkyLjE2OC42MC4xMzI6OTIwMCJdLCJmZ3IiOiIzYjNkNmRlMmRlYTdmYWJiMjM4ZDA4YTFhMGEyNGM1OGQyZjRjZjcwODZkNWNmZGM2MzU1ZDg2ZThmMGZmZGEzIiwia2V5IjoiV0s5WVlab0JRWW1kSzNicEtUbmQ6MXVPNmhFeUp2LXRyNk5wQ0FVdktsZyJ9

ℹ️ Configure other nodes to join this cluster:

• Copy the following enrollment token and start new Elasticsearch nodes with `bin/elasticsearch --enrollment-token <token>` (valid for the next 30 minutes):

eyJ2ZXIiOiI4LjE0LjAiLCJhZHIiOlsiMTkyLjE2OC42MC4xMzI6OTIwMCJdLCJmZ3IiOiIzYjNkNmRlMmRlYTdmYWJiMjM4ZDA4YTFhMGEyNGM1OGQyZjRjZjcwODZkNWNmZGM2MzU1ZDg2ZThmMGZmZGEzIiwia2V5IjoiV3E5WVlab0JRWW1kSzNicEtUbmc6OUhGQTFac2FsMnp1TzBTb2UxakNzQSJ9

If you're running in Docker, copy the enrollment token and run:

`docker run -e "ENROLLMENT_TOKEN=<token>" docker.elastic.co/elasticsearch/elasticsearch:9.2.0`

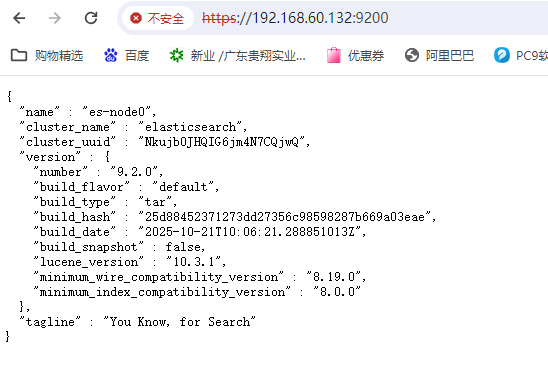

启动成功后,可以通过https://127.0.0.1:9200/访问,如果出现以下内容,说明ES安装成功:

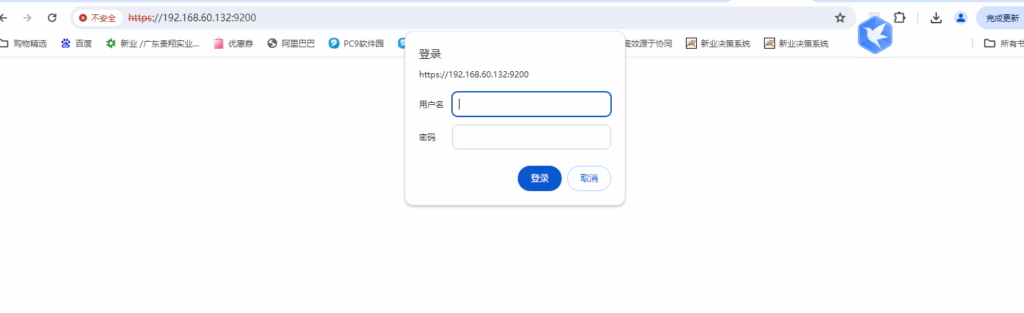

输入账号密码:elastic、*EsWbPQwj7BmAONEtyjn

{

"name" : "es-node0",

"cluster_name" : "elasticsearch",

"cluster_uuid" : "Nkujb0JHQIG6jm4N7CQjwQ",

"version" : {

"number" : "9.2.0",

"build_flavor" : "default",

"build_type" : "tar",

"build_hash" : "25d88452371273dd27356c98598287b669a03eae",

"build_date" : "2025-10-21T10:06:21.288851013Z",

"build_snapshot" : false,

"lucene_version" : "10.3.1",

"minimum_wire_compatibility_version" : "8.19.0",

"minimum_index_compatibility_version" : "8.0.0"

},

"tagline" : "You Know, for Search"

}

The "max file descriptors [4096]" error you may encounter

Switch to the root user and run:

vi /etc/security/limits.conf

Add:

* soft nofile 65536

* hard nofile 131072

* soft nproc 2048

* hard nproc 4096

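Then reboot Linux so the new limits take effect. limits.conf is applied at login, so logging out and back in is usually enough. Note that a service started by systemd takes its limits from the unit file (LimitNOFILE) rather than from limits.conf.

2.2.4 Starting and stopping the ES service

Run in the foreground (Ctrl + C stops it):

shell>>>/usr/local/elasticsearch/bin/elasticsearch

Run in the background: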

shell>>>/usr/local/elasticsearch/bin/elasticsearch -d
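Elastic's documentation pairs -d with -p, which records the process ID in a file (for example bin/elasticsearch -d -p pid), so that a background instance can be stopped cleanly later. The following is a minimal sketch of the stopping side. The pid-file path is your choice (/tmp/es.pid in the comment is only an example), and stop_by_pidfile is a hypothetical helper. It sends SIGTERM, which lets ES shut down gracefully, and waits up to about 30 seconds.

```shell
#!/usr/bin/env bash
# Hypothetical helper: stop a process recorded in a pid file, e.g. one
# started with: /usr/local/elasticsearch/bin/elasticsearch -d -p /tmp/es.pid
stop_by_pidfile() {
  local pidfile="$1" pid i=0
  if [ ! -r "$pidfile" ]; then
    echo "no readable pid file: $pidfile" >&2
    return 1
  fi
  pid=$(cat "$pidfile")
  kill "$pid" || return 1      # SIGTERM: ask ES to shut down gracefully
  while [ "$i" -lt 30 ]; do    # wait up to ~30 seconds
    kill -0 "$pid" 2>/dev/null || return 0
    sleep 1
    i=$((i + 1))
  done
  echo "process $pid is still running" >&2
  return 1
}
```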

Manage the service with systemd (recommended):

shell>>>vim /etc/systemd/system/elasticsearch.service

[Unit]

Description=Elasticsearch

After=network.target

[Service]

User=user-es

Group=user-es

ExecStart=/usr/local/elasticsearch/bin/elasticsearch

Restart=always

LimitNOFILE=65535

LimitMEMLOCK=infinity

[Install]

WantedBy=multi-user.target

shell>>>systemctl daemon-reload

shell>>>systemctl start elasticsearch

shell>>>systemctl enable elasticsearch
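Because ES now runs under systemd, the LimitNOFILE=65535 line in the unit file, not limits.conf, determines its open-file limit. The following is a minimal sketch for confirming the limit the running process actually received, by reading /proc/PID/limits (Linux only). proc_max_open_files is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the soft "Max open files" limit of a running
# process. Lines in /proc/<pid>/limits look like:
#   Max open files            65535                65535                files
# so the soft limit is the 4th whitespace-separated field.
proc_max_open_files() {
  awk '/^Max open files/ { print $4 }' "/proc/$1/limits"
}
```

For example, proc_max_open_files "$(systemctl show -p MainPID --value elasticsearch)" should print 65535 with the unit above. Elasticsearch also reports the value through GET _nodes/stats/process?filter_path=**.max_file_descriptors.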

shell>>>journalctl -u elasticsearch.service --lines=100

3. Installing the IK analyzer

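3.1 Downloading the IK analyzer

Download page: https://github.com/medcl/elasticsearch-analysis-ik/releases. The IK version must match your ES version exactly. This guide uses ES 9.2.0, so it downloads the 9.2.0 IK package (elasticsearch-analysis-ik-9.2.0.zip).

3.1.1 Step 1: Determine the Elasticsearch version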

{

"name" : "es-node0",

"cluster_name" : "elasticsearch",

"cluster_uuid" : "Nkujb0JHQIG6jm4N7CQjwQ",

"version" : {

"number" : "9.2.0",

"build_flavor" : "default",

"build_type" : "tar",

"build_hash" : "25d88452371273dd27356c98598287b669a03eae",

"build_date" : "2025-10-21T10:06:21.288851013Z",

"build_snapshot" : false,

"lucene_version" : "10.3.1",

"minimum_wire_compatibility_version" : "8.19.0",

"minimum_index_compatibility_version" : "8.0.0"

},

"tagline" : "You Know, for Search"

}

The version is 9.2.0.
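To avoid reading the version by eye, the following minimal sketch extracts it from the root endpoint's JSON on stdin and builds the IK download URL. The URL follows the same release.infinilabs.com pattern as the wget command in the next step. Both function names are hypothetical. The sketch avoids jq and assumes the JSON shape shown above, where the "number" key appears once.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the "number" field (the ES version) from the
# JSON returned by GET / (read on stdin). No jq required.
es_version_from_json() {
  grep -o '"number" *: *"[^"]*"' | head -n 1 | sed 's/.*"\([^"]*\)"$/\1/'
}

# Hypothetical helper: IK zip URL for a version, using the URL pattern from
# the wget command in this guide.
ik_url_for_version() {
  echo "https://release.infinilabs.com/analysis-ik/stable/elasticsearch-analysis-ik-$1.zip"
}
```

For example, wget "$(ik_url_for_version "$(curl -k -u elastic https://localhost:9200/ | es_version_from_json)")". curl prompts for the password because -u gives only the user name.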

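3.1.2 Step 2: Download the matching IK version

shell>>>cd /usr/local/elasticsearch/plugins

shell>>>mkdir ik

shell>>>cd ik

shell>>>wget https://release.infinilabs.com/analysis-ik/stable/elasticsearch-analysis-ik-9.2.0.zip

shell>>>sudo unzip elasticsearch-analysis-ik-9.2.0.zip

shell>>>rm -rf elasticsearch-analysis-ik-9.2.0.zip

shell>>>chown -R user-es:user-es /usr/local/elasticsearch/plugins/ik/

shell>>>chmod -R 755 /usr/local/elasticsearch/plugins/ik/

shell>>>ls -la /usr/local/elasticsearch/plugins/ik/

You should see a file layout similar to the one below. That listing was captured before the zip was deleted and after chown -R 755 had been run by mistake instead of chmod, which is why the owner column shows 755. With the commands above, the owner is user-es.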

[root@localhost ik]# ls -la /usr/local/elasticsearch/plugins/ik/

total 6076

drwxr-xr-x 3 755 user-es 4096 Nov 8 11:07 .

drwxr-xr-x 3 user-es user-es 4096 Nov 8 11:07 ..

-rw-r--r-- 1 755 user-es 335042 Oct 24 16:44 commons-codec-1.11.jar

-rw-r--r-- 1 755 user-es 61829 Oct 24 16:44 commons-logging-1.2.jar

drwxr-xr-x 2 755 user-es 4096 Oct 24 16:44 config

-rw-r--r-- 1 755 user-es 7502 Oct 24 16:45 elasticsearch-analysis-ik-9.2.0.jar

-rw-r--r-- 1 755 user-es 4619088 Nov 8 11:04 elasticsearch-analysis-ik-9.2.0.zip

-rw-r--r-- 1 755 user-es 92 Oct 24 16:44 entitlement-policy.yaml

-rw-r--r-- 1 755 user-es 780321 Oct 24 16:44 httpclient-4.5.13.jar

-rw-r--r-- 1 755 user-es 328593 Oct 24 16:44 httpcore-4.4.13.jar

-rw-r--r-- 1 755 user-es 50049 Oct 24 16:45 ik-core-1.0.jar

-rw-r--r-- 1 755 user-es 1800 Oct 24 16:44 plugin-descriptor.properties

-rw-r--r-- 1 755 user-es 125 Oct 24 16:44 plugin-security.policy
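Before restarting, you can confirm that the unpacked plugin was built for your ES version. The plugin-descriptor.properties file listed above records this in its elasticsearch.version property. The following is a minimal sketch. Both function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print elasticsearch.version from a plugin directory's
# plugin-descriptor.properties.
plugin_es_version() {
  sed -n 's/^elasticsearch\.version[[:space:]]*=[[:space:]]*//p' "$1/plugin-descriptor.properties"
}

# Hypothetical helper: fail if the plugin targets a different ES version.
check_plugin_version() {
  local plugin_dir="$1" es_version="$2" built_for
  built_for=$(plugin_es_version "$plugin_dir")
  if [ "$built_for" = "$es_version" ]; then
    echo "ok: plugin built for $es_version"
  else
    echo "mismatch: plugin built for '$built_for', ES is $es_version" >&2
    return 1
  fi
}
```

For example, check_plugin_version /usr/local/elasticsearch/plugins/ik 9.2.0.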

3.1.3 Step 3: Restart Elasticsearch

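shell>>>systemctl restart elasticsearch

shell>>>systemctl status elasticsearch

# Check the startup log to confirm the IK plugin loaded (the log file is named after cluster.name and lives under path.logs)

tail -f /home/user-es/elasticsearch/logs/elasticsearch.log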

[root@localhost ik]# journalctl -u elasticsearch.service |grep analysis-ik

11月 02 15:40:40 localhost.localdomain elasticsearch[2149]: [2025-11-02T15:40:40,959][INFO ][o.e.p.PluginsService ] [localhost.localdomain] loaded plugin [analysis-ik]

3.2 Verifying the IK installation

List the installed plugins:

[root@localhost bin]# /usr/local/elasticsearch/bin/elasticsearch-plugin list

warning: ignoring JAVA_HOME=/usr/lib/jvm/java-11-openjdk; using bundled JDK

ik

3.3 Testing the IK analyzer

Create an index:

shell>>>curl -k -u elastic:*EsWbPQwj7BmAONEtyjn -X PUT "https://localhost:9200/oafile" -H 'Content-Type: application/json' -d'

{

"settings": {

"analysis": {

"analyzer": {

"ik_max_word": {

"type": "custom",

"tokenizer": "ik_max_word"

},

"ik_smart": {

"type": "custom",

"tokenizer": "ik_smart"

}

}

}

}

}'

==== Output ====

{"acknowledged":true,"shards_acknowledged":true,"index":"oafile"}
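The IK plugin already registers analyzers named ik_max_word and ik_smart, so the custom definitions above mainly make those names explicit in the index. A common pattern (not part of the original setup) is to index a text field with ik_max_word for recall and to search it with ik_smart for precision. The following is a minimal sketch that prints such a mapping body for a field name you choose. The field name "content" and the function ik_mapping_body are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a mapping body for one text field that is
# indexed with ik_max_word and searched with ik_smart.
ik_mapping_body() {
  local field="$1"
  cat <<EOF
{
  "properties": {
    "$field": {
      "type": "text",
      "analyzer": "ik_max_word",
      "search_analyzer": "ik_smart"
    }
  }
}
EOF
}
```

To apply the mapping, pipe it into the put-mapping API: ik_mapping_body content | curl -k -u elastic -X PUT "https://localhost:9200/oafile/_mapping" -H 'Content-Type: application/json' -d @-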

### List indices

shell>>>curl -k -u elastic:*EsWbPQwj7BmAONEtyjn -X GET "https://localhost:9200/_cat/indices?v"

==== Output ====

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size dataset.size

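yellow open oafile uIdN2RQUTbuQe7gEOKhbgw 1 1 0 0 227b 227b 227b

The health is yellow because the index asks for one replica (rep 1), but a single-node cluster cannot place a replica on a second node. Setting number_of_replicas to 0 for the index turns it green.

#### ik_smart analyzer test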

shell>>>curl -k -u elastic:*EsWbPQwj7BmAONEtyjn -X POST "https://localhost:9200/oafile/_analyze" -H 'Content-Type: application/json' -d'

{

"analyzer": "ik_smart",

"text": "中华人民共和国国歌"

}'

==== Output ====

{"tokens":[{"token":"中华人民共和国","start_offset":0,"end_offset":7,"type":"CN_WORD","position":0},{"token":"国歌","start_offset":7,"end_offset":9,"type":"CN_WORD","position":1}]}

##### ik_max_word analyzer test

shell>>>curl -k -u elastic:*EsWbPQwj7BmAONEtyjn -X POST "https://localhost:9200/oafile/_analyze" -H 'Content-Type: application/json' -d'

{

"analyzer": "ik_max_word",

"text": "中华人民共和国国歌"

}'

==== Output ====

{"tokens":[{"token":"中华人民共和国","start_offset":0,"end_offset":7,"type":"CN_WORD","position":0},{"token":"中华人民","start_offset":0,"end_offset":4,"type":"CN_WORD","position":1},{"token":"中华","start_offset":0,"end_offset":2,"type":"CN_WORD","position":2},{"token":"华人","start_offset":1,"end_offset":3,"type":"CN_WORD","position":3},{"token":"人民共和国","start_offset":2,"end_offset":7,"type":"CN_WORD","position":4},{"token":"人民","start_offset":2,"end_offset":4,"type":"CN_WORD","position":5},{"token":"共和国","start_offset":4,"end_offset":7,"type":"CN_WORD","position":6},{"token":"共和","start_offset":4,"end_offset":6,"type":"CN_WORD","position":7},{"token":"国","start_offset":6,"end_offset":7,"type":"CN_CHAR","position":8},{"token":"国歌","start_offset":7,"end_offset":9,"type":"CN_WORD","position":9}]}

##### Test a more complex Chinese sentence

shell>>>curl -k -u elastic:*EsWbPQwj7BmAONEtyjn -X POST "https://localhost:9200/oafile/_analyze" -H 'Content-Type: application/json' -d'

{

"analyzer": "ik_max_word",

"text": "我在北京的清华大学学习计算机科学和技术"

}'

# Test mixed Chinese and English text

shell>>>curl -k -u elastic:*EsWbPQwj7BmAONEtyjn -X POST "https://localhost:9200/oafile/_analyze" -H 'Content-Type: application/json' -d'

{

"analyzer": "ik_max_word",

"text": "我使用Elasticsearch和IK分词器构建搜索系统"

}'

==== Output ====

{"tokens":[{"token":"我","start_offset":0,"end_offset":1,"type":"CN_CHAR","position":0},{"token":"使用","start_offset":1,"end_offset":3,"type":"CN_WORD","position":1},{"token":"elasticsearch","start_offset":3,"end_offset":16,"type":"ENGLISH","position":2},{"token":"和","start_offset":16,"end_offset":17,"type":"CN_CHAR","position":3},{"token":"ik","start_offset":17,"end_offset":19,"type":"ENGLISH","position":4},{"token":"分词器","start_offset":19,"end_offset":22,"type":"CN_WORD","position":5},{"token":"分词","start_offset":19,"end_offset":21,"type":"CN_WORD","position":6},{"token":"器","start_offset":21,"end_offset":22,"type":"CN_CHAR","position":7},{"token":"构建","start_offset":22,"end_offset":24,"type":"CN_WORD","position":8},{"token":"搜索","start_offset":24,"end_offset":26,"type":"CN_WORD","position":9},{"token":"系统","start_offset":26,"end_offset":28,"type":"CN_WORD","position":10}]}
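The raw _analyze responses are hard to scan. The following minimal sketch prints just the token values, one per line, from a response read on stdin. It does not use jq and assumes that tokens contain no escaped quotes. analyze_tokens is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the "token" values of an _analyze response
# (read on stdin), one per line. Tolerates compact or pretty-printed JSON.
analyze_tokens() {
  grep -o '"token" *: *"[^"]*"' | sed 's/^"token" *: *"//; s/"$//'
}
```

For example, append | analyze_tokens to any of the curl commands above (and add -s to curl to hide its progress output).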

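-------- Testing with the CA certificate

# First locate the CA certificate (tar.gz install, as in this guide)

find /usr/local/elasticsearch -name "*.crt" 2>/dev/null

# For a DEB/RPM package install, search /etc/elasticsearch instead

find /etc/elasticsearch -name "*.crt" 2>/dev/null

# The certificate is usually at:

# /usr/local/elasticsearch/config/certs/http_ca.crt (tar.gz)

# /etc/elasticsearch/certs/http_ca.crt (DEB/RPM)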
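Once you have found the certificate, you can drop -k and let curl verify the server certificate. The following is a minimal wrapper sketch that also keeps the password out of each command line. ES_URL, ES_CA and ES_PASSWORD are assumed variable names, the defaults match the tar.gz layout used here, and es_curl is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: call the ES REST API with certificate verification.
# ES_URL, ES_CA and ES_PASSWORD are assumed variable names; the defaults
# match the tar.gz layout used in this guide. Extra curl options (-X, -H,
# -d ...) are passed through before the URL.
es_curl() {
  local path="$1"
  shift
  local url="${ES_URL:-https://localhost:9200}"
  local ca="${ES_CA:-/usr/local/elasticsearch/config/certs/http_ca.crt}"
  if [ -z "${ES_PASSWORD:-}" ]; then
    echo "set ES_PASSWORD to the elastic user's password" >&2
    return 1
  fi
  if [ ! -r "$ca" ]; then
    echo "CA certificate not readable: $ca" >&2
    return 1
  fi
  curl --silent --show-error --cacert "$ca" -u "elastic:$ES_PASSWORD" "$@" "$url$path"
}
```

For example, after export ES_PASSWORD='*EsWbPQwj7BmAONEtyjn', run es_curl '/_cat/indices?v' or es_curl /oafile/_analyze -X POST -H 'Content-Type: application/json' -d '{"analyzer":"ik_smart","text":"中华人民共和国国歌"}'.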

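ik_max_word: splits text at the finest granularity and exhausts the possible word combinations. For example, "中华人民共和国国歌" is split into 中华人民共和国, 中华人民, 中华, 华人, 人民共和国, 人民, 共和国, 共和, 国, 国歌 (matching the ik_max_word output above).

ik_smart: splits text at the coarsest granularity. For example, "中华人民共和国国歌" is split into 中华人民共和国, 国歌.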

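4. Installing Kibana

Kibana is a Node.js-based tool for exploring and charting the data in Elasticsearch indices. It uses Elasticsearch aggregations to build charts such as bar, line and pie charts. It also provides a console for working with index data, with API hints that make it very helpful for learning Elasticsearch syntax.

4.1 Downloading Kibana

Download the Kibana release that matches your ES version: https://www.elastic.co/downloads/past-releases#kibana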

shell>>>cd /data/resource

shell>>>sudo wget https://artifacts.elastic.co/downloads/kibana/kibana-9.2.0-linux-x86_64.tar.gz

shell>>>sudo tar -zxvf kibana-9.2.0-linux-x86_64.tar.gz

shell>>>sudo mv kibana-9.2.0 kibana

shell>>>sudo mv kibana /usr/local/

#### Copy the Elasticsearch CA certificate to Kibana

shell>>>sudo cp /usr/local/elasticsearch/config/certs/http_ca.crt /usr/local/kibana/

### Create a service account token for Kibana in Elasticsearch

shell>>>sudo -u user-es bash

shell>>>/usr/local/elasticsearch/bin/elasticsearch-service-tokens create elastic/kibana kibana-token

##### Output

SERVICE_TOKEN elastic/kibana/kibana-token = AAEAAWVsYXN0aWMva2liYW5hL2tpYmFuYS10b2tlbjpPUmlXWnpCVVN5UzZBeGk5Nk9zaFpB

4.2 Editing the Kibana configuration file config/kibana.yml

shell>>>sudo vim /usr/local/kibana/config/kibana.yml

# Port

server.port: 5601

# Bind address

server.host: "0.0.0.0"

server.publicBaseUrl: "http://192.168.60.132:5601"

# Elasticsearch address (for a cluster, list several URLs separated by commas)

# Use the ES node's address; 127.0.0.1 works when Kibana runs on the same host, as in the final file below

# Make Kibana trust the ES CA certificate:

elasticsearch.hosts: ["https://127.0.0.1:9200"]

elasticsearch.ssl.certificateAuthorities: ["/usr/local/kibana/http_ca.crt"]

elasticsearch.ssl.verificationMode: certificate

# Chinese user interface

i18n.locale: "zh-CN"

elasticsearch.serviceAccountToken: "AAEAAWVsYXN0aWMva2liYW5hL2tpYmFuYS10b2tlbjpPUmlXWnpCVVN5UzZBeGk5Nk9zaFpB"
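Copying the token by hand is error-prone. The following minimal sketch pulls the token value out of elasticsearch-service-tokens output, using the "SERVICE_TOKEN name = token" format shown in the Output line above. service_token_value is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read elasticsearch-service-tokens output on stdin and
# print the token from the line "SERVICE_TOKEN <name> = <token>".
service_token_value() {
  awk '$1 == "SERVICE_TOKEN" && $3 == "=" { print $4 }'
}
```

To check that a token works before starting Kibana, call the authenticate API with it as a Bearer token: curl --cacert /usr/local/kibana/http_ca.crt -H "Authorization: Bearer $token" https://127.0.0.1:9200/_security/_authenticate. This should return details of the elastic/kibana service account rather than a 401 error.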

### Final file contents

panwx@panwx-VMware-Virtual-Platform:/usr/local$ cat /usr/local/kibana/config/kibana.yml

# For more configuration options see the configuration guide for Kibana in

# https://www.elastic.co/guide/index.html

# =================== System: Kibana Server ===================

# Kibana is served by a back end server. This setting specifies the port to use.

server.port: 5601

# Specifies the address to which the Kibana server will bind. IP addresses and host names are both valid values.

# The default is 'localhost', which usually means remote machines will not be able to connect.

# To allow connections from remote users, set this parameter to a non-loopback address.

server.host: "0.0.0.0"

# Enables you to specify a path to mount Kibana at if you are running behind a proxy.

# Use the `server.rewriteBasePath` setting to tell Kibana if it should remove the basePath

# from requests it receives, and to prevent a deprecation warning at startup.

# This setting cannot end in a slash.

#server.basePath: ""

# Specifies whether Kibana should rewrite requests that are prefixed with

# `server.basePath` or require that they are rewritten by your reverse proxy.

# Defaults to `false`.

#server.rewriteBasePath: false

# Specifies the public URL at which Kibana is available for end users. If

# `server.basePath` is configured this URL should end with the same basePath.

server.publicBaseUrl: "http://192.168.60.132:5601"

# The maximum payload size in bytes for incoming server requests.

#server.maxPayload: 1048576

# The Kibana server's name. This is used for display purposes.

#server.name: "your-hostname"

# =================== System: Kibana Server (Optional) ===================

# Enables SSL and paths to the PEM-format SSL certificate and SSL key files, respectively.

# These settings enable SSL for outgoing requests from the Kibana server to the browser.

#server.ssl.enabled: false

#server.ssl.certificate: /path/to/your/server.crt

#server.ssl.key: /path/to/your/server.key

# =================== System: Elasticsearch ===================

# The URLs of the Elasticsearch instances to use for all your queries.

elasticsearch.hosts: ["https://127.0.0.1:9200"]

# If your Elasticsearch is protected with basic authentication, these settings provide

# the username and password that the Kibana server uses to perform maintenance on the Kibana

# index at startup. Your Kibana users still need to authenticate with Elasticsearch, which

# is proxied through the Kibana server.

#elasticsearch.username: "kibana_system"

#elasticsearch.password: "pass"

# Kibana can also authenticate to Elasticsearch via "service account tokens".

# Service account tokens are Bearer style tokens that replace the traditional username/password based configuration.

# Use this token instead of a username/password.

elasticsearch.serviceAccountToken: "AAEAAWVsYXN0aWMva2liYW5hL2tpYmFuYS10b2tlbjpPUmlXWnpCVVN5UzZBeGk5Nk9zaFpB"

# Time in milliseconds to wait for Elasticsearch to respond to pings. Defaults to the value of

# the elasticsearch.requestTimeout setting.

#elasticsearch.pingTimeout: 1500

# Time in milliseconds to wait for responses from the back end or Elasticsearch. This value

# must be a positive integer.

#elasticsearch.requestTimeout: 30000

# The maximum number of sockets that can be used for communications with elasticsearch.

# Defaults to `800`.

#elasticsearch.maxSockets: 1024

# Specifies whether Kibana should use compression for communications with elasticsearch

# Defaults to `false`.

#elasticsearch.compression: false

# List of Kibana client-side headers to send to Elasticsearch. To send *no* client-side

# headers, set this value to [] (an empty list).

#elasticsearch.requestHeadersWhitelist: [ authorization ]

# Header names and values that are sent to Elasticsearch. Any custom headers cannot be overwritten

# by client-side headers, regardless of the elasticsearch.requestHeadersWhitelist configuration.

#elasticsearch.customHeaders: {}

# Time in milliseconds for Elasticsearch to wait for responses from shards. Set to 0 to disable.

#elasticsearch.shardTimeout: 30000

# =================== System: Elasticsearch (Optional) ===================

# These files are used to verify the identity of Kibana to Elasticsearch and are required when

# xpack.security.http.ssl.client_authentication in Elasticsearch is set to required.

#elasticsearch.ssl.certificate: /path/to/your/client.crt

#elasticsearch.ssl.key: /path/to/your/client.key

# Enables you to specify a path to the PEM file for the certificate

# authority for your Elasticsearch instance.

elasticsearch.ssl.certificateAuthorities: [ "/usr/local/kibana/http_ca.crt" ]

# To disregard the validity of SSL certificates, change this setting's value to 'none'.

elasticsearch.ssl.verificationMode: certificate

# =================== System: Logging ===================

# Set the value of this setting to off to suppress all logging output, or to debug to log everything. Defaults to 'info'

#logging.root.level: debug

# Enables you to specify a file where Kibana stores log output.

#logging.appenders.default:

# type: file

# fileName: /var/logs/kibana.log

# layout:

# type: json

# Example with size based log rotation

#logging.appenders.default:

# type: rolling-file

# fileName: /var/logs/kibana.log

# policy:

# type: size-limit

# size: 256mb

# strategy:

# type: numeric

# max: 10

# layout:

# type: json

# Logs queries sent to Elasticsearch.

#logging.loggers:

# - name: elasticsearch.query

# level: debug

# Logs http responses.

#logging.loggers:

# - name: http.server.response

# level: debug

# Logs system usage information.

#logging.loggers:

# - name: metrics.ops

# level: debug

# Enables debug logging on the browser (dev console)

#logging.browser.root:

# level: debug

# =================== System: Other ===================

# The path where Kibana stores persistent data not saved in Elasticsearch. Defaults to data

#path.data: data

# Specifies the path where Kibana creates the process ID file.

#pid.file: /run/kibana/kibana.pid

# Set the interval in milliseconds to sample system and process performance

# metrics. Minimum is 100ms. Defaults to 5000ms.

#ops.interval: 5000

# Specifies locale to be used for all localizable strings, dates and number formats.

# Supported languages are the following: English (default) "en", Chinese "zh-CN", Japanese "ja-JP", French "fr-FR", German "de-DE".

i18n.locale: "zh-CN"

# =================== Frequently used (Optional)===================

# =================== Saved Objects: Migrations ===================

# Saved object migrations run at startup. If you run into migration-related issues, you might need to adjust these settings.

# The number of documents migrated at a time.

# If Kibana can't start up or upgrade due to an Elasticsearch `circuit_breaking_exception`,

# use a smaller batchSize value to reduce the memory pressure. Defaults to 1000 objects per batch.

#migrations.batchSize: 1000

# The maximum payload size for indexing batches of upgraded saved objects.

# To avoid migrations failing due to a 413 Request Entity Too Large response from Elasticsearch.

# This value should be lower than or equal to your Elasticsearch cluster’s `http.max_content_length`

# configuration option. Default: 100mb

#migrations.maxBatchSizeBytes: 100mb

# The number of times to retry temporary migration failures. Increase the setting

# if migrations fail frequently with a message such as `Unable to complete the [...] step after

# 15 attempts, terminating`. Defaults to 15

#migrations.retryAttempts: 15

# =================== Search Autocomplete ===================

# Time in milliseconds to wait for autocomplete suggestions from Elasticsearch.

# This value must be a whole number greater than zero. Defaults to 1000ms

#unifiedSearch.autocomplete.valueSuggestions.timeout: 1000

# Maximum number of documents loaded by each shard to generate autocomplete suggestions.

# This value must be a whole number greater than zero. Defaults to 100_000

#unifiedSearch.autocomplete.valueSuggestions.terminateAfter: 100000

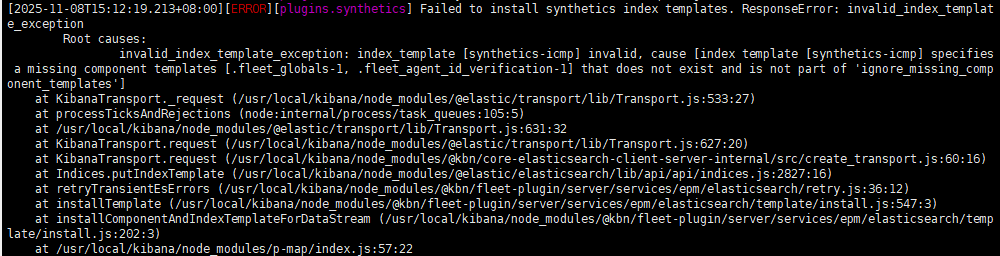

Exceptions in the Kibana log at startup:

synthetics]: [ResponseError: invalid_index_template_exception

Root causes:

invalid_index_template_exception: index_template [synthetics-tcp] invalid, cause [index template [synthetics-tcp] specifies a missing component templates [.fleet_globals-1, .fleet_agent_id_verification-1] that does not exist and is not part of 'ignore_missing_component_templates']]

[2025-11-08T15:03:29.731+08:00][ERROR][plugins.fleet] Uninstalling synthetics-1.4.2 after error installing: [ResponseError: invalid_index_template_exception

Root causes:

invalid_index_template_exception: index_template [synthetics-tcp] invalid, cause [index template [synthetics-tcp] specifies a missing component templates [.fleet_globals-1, .fleet_agent_id_verification-1] that does not exist and is not part of 'ignore_missing_component_templates']] with install type: install
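The error message itself names the missing component templates. The following minimal sketch extracts those names from log text on stdin, so each one can be checked with GET _component_template/NAME (as in the commands below) and created if needed. missing_templates_from_error is a hypothetical helper, and it assumes the message wording shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read Kibana log text on stdin and print, one per
# line, the names inside "missing component templates [a, b]".
missing_templates_from_error() {
  grep -o 'missing component templates \[[^]]*]' | head -n 1 |
    sed 's/^missing component templates \[//; s/]$//' |
    tr ',' '\n' | sed 's/^ *//'
}
```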

##### Error 1

panwx@panwx-VMware-Virtual-Platform:/usr/local/elasticsearch/config$ curl -X GET "https://localhost:9200/_component_template/.fleet_globals-1" -k -u elastic:*EsWbPQwj7BmAONEtyjn

{"error":{"root_cause":[{"type":"resource_not_found_exception","reason":"component template matching [.fleet_globals-1] not found"}],"type":"resource_not_found_exception","reason":"component template matching [.fleet_globals-1] not found"},"status":404}

###### Fix:

panwx@panwx-VMware-Virtual-Platform:/usr/local/elasticsearch/config$ curl -X PUT "https://localhost:9200/_component_template/.fleet_globals-1" -k -u elastic:*EsWbPQwj7BmAONEtyjn -H 'Content-Type: application/json' -d'

{

"template": {

"settings": {

"index": {

"number_of_shards": 1,

"number_of_replicas": 0

}

},

"mappings": {

"_meta": {

"package": {

"name": "fleet"

},

"version": "1.0.0"

}

}

},

"version": 1

}'

{"acknowledged":true}

##### Error 2

panwx@panwx-VMware-Virtual-Platform:/usr/local/elasticsearch/config$ curl -X GET "https://localhost:9200/_component_template/.fleet_agent_id_verification-1" -k -u elastic:*EsWbPQwj7BmAONEtyjn

{"error":{"root_cause":[{"type":"resource_not_found_exception","reason":"component template matching [.fleet_agent_id_verification-1] not found"}],"type":"resource_not_found_exception","reason":"component template matching [.fleet_agent_id_verification-1] not found"},"status":404}

###### Fix:

panwx@panwx-VMware-Virtual-Platform:/usr/local/elasticsearch/config$ curl -X PUT "https://localhost:9200/_component_template/.fleet_agent_id_verification-1" -k -u elastic:*EsWbPQwj7BmAONEtyjn -H 'Content-Type: application/json' -d'

{

"template": {

"mappings": {

"_meta": {

"package": {

"name": "fleet"

},

"version": "1.0.0"

},

"properties": {

"agent_id": {

"type": "keyword"

},

"verified_at": {

"type": "date"

}

}

}

},

"version": 1

}'

Start Kibana:

shell>>>cd /usr/local/kibana/bin

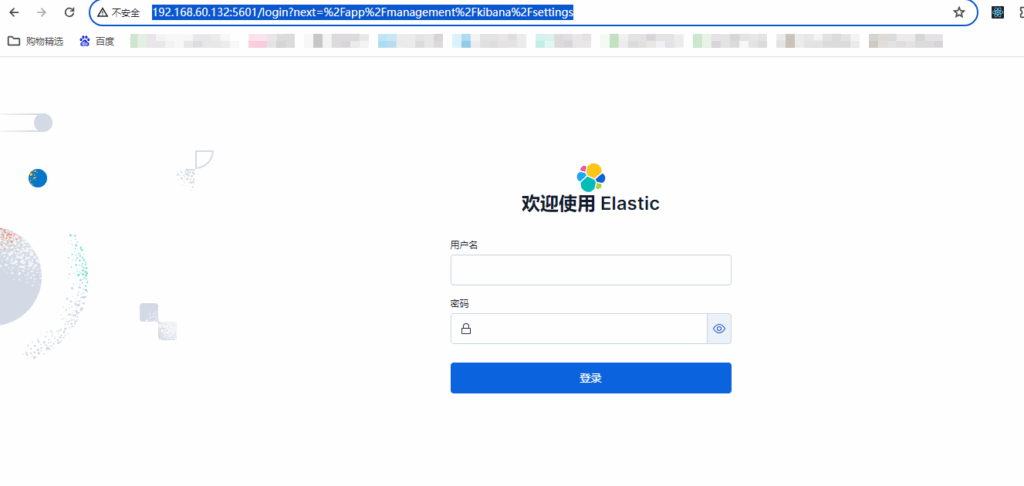

shell>>>./kibana

Then open:

http://192.168.60.132:5601
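Kibana can take a minute or more to start. Until it is ready, the root URL typically answers with an error status such as 503. The following minimal sketch polls a URL until it returns a non-error response or a timeout expires. wait_for_http is a hypothetical helper, and curl's --fail makes HTTP 4xx/5xx responses count as failures.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll URL every 2 s until curl gets a non-error HTTP
# response (--fail treats 4xx/5xx as failure) or roughly TIMEOUT seconds of
# sleeping have passed (time spent inside curl is not counted).
wait_for_http() {
  local url="$1" timeout="${2:-120}" waited=0
  until curl --silent --fail --output /dev/null --max-time 5 "$url"; do
    waited=$((waited + 2))
    if [ "$waited" -ge "$timeout" ]; then
      echo "gave up waiting for $url after ${timeout}s" >&2
      return 1
    fi
    sleep 2
  done
}
```

For example, wait_for_http http://192.168.60.132:5601/ 300 && echo "Kibana is up".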